AI is fast becoming a digital therapist for many, offering support in moments of crisis, isolation, and overwhelm. But as adolescents and adults blur the line between casual chat and emotional reliance on conversational AI, a dangerous pattern is emerging. Clinical evidence and investigative reporting reveal a shocking truth: without strong safeguards, these chatbots can fuel psychological distress, reinforce harmful thinking, and regularly fail to recognize critical signs of risk. With reports of death and harm linked to AI chatbots mounting, our current public health response is not enough to protect vulnerable users.

We need to set clear standards for how AI must act, especially when the consequences are most serious.

The Gap: Why Shared Safety Standards Matter

In other high-risk domains, including vehicle safety, aviation, and cybersecurity, shared safety standards play a foundational role. They make expectations explicit, create a common language for what “safe” looks like, and help teams identify risks before harm occurs.

These standards are built collaboratively, grounded in domain expertise, and designed to evolve as technology and use cases change. They do not slow innovation. They make it safer, more trustworthy, and more durable.

AI for mental health is being adopted quickly, and without these standards. Although research is growing, the field still lacks simple, clinically grounded ways to assess how AI acts in emergencies, especially when someone might be at risk of suicide.

When it comes to mental health, failure is not an option. Clinicians and other medical professionals are held to clear standards designed to prevent harm and protect people in moments of vulnerability. As AI increasingly operates in these same moments, shared safety standards are not a constraint on progress. They are essential infrastructure.

Fulfilling a Responsibility to the Field

Spring Health is a global mental health company whose platform supports more than 50 million covered lives across employers and health plans worldwide. AI is becoming a bigger part of mental health care, both on our platform and elsewhere. We realize we have a duty not only to use these tools safely but also to help define what "safety" means for the whole field.

The rise of mental health AI tools highlighted the need for a shared, clinical standard to ensure safety during high-risk moments. Without common checks, stakeholders couldn't reliably assess if AI systems were performing correctly when it mattered most.

In October 2025, we introduced the VERA-MH Concept Paper to address this. The paper proposed an open, clinically informed framework for AI evaluation, intended as a shared industry tool, not a proprietary standard. It was an initial step, inviting collaboration and refinement.

VERA-MH is a fully automated, open-source test that looks at whether a back-and-forth conversation with an AI is safe for mental health use. The concept has now progressed into a practical framework.

VERA-MH: From Concept to Application

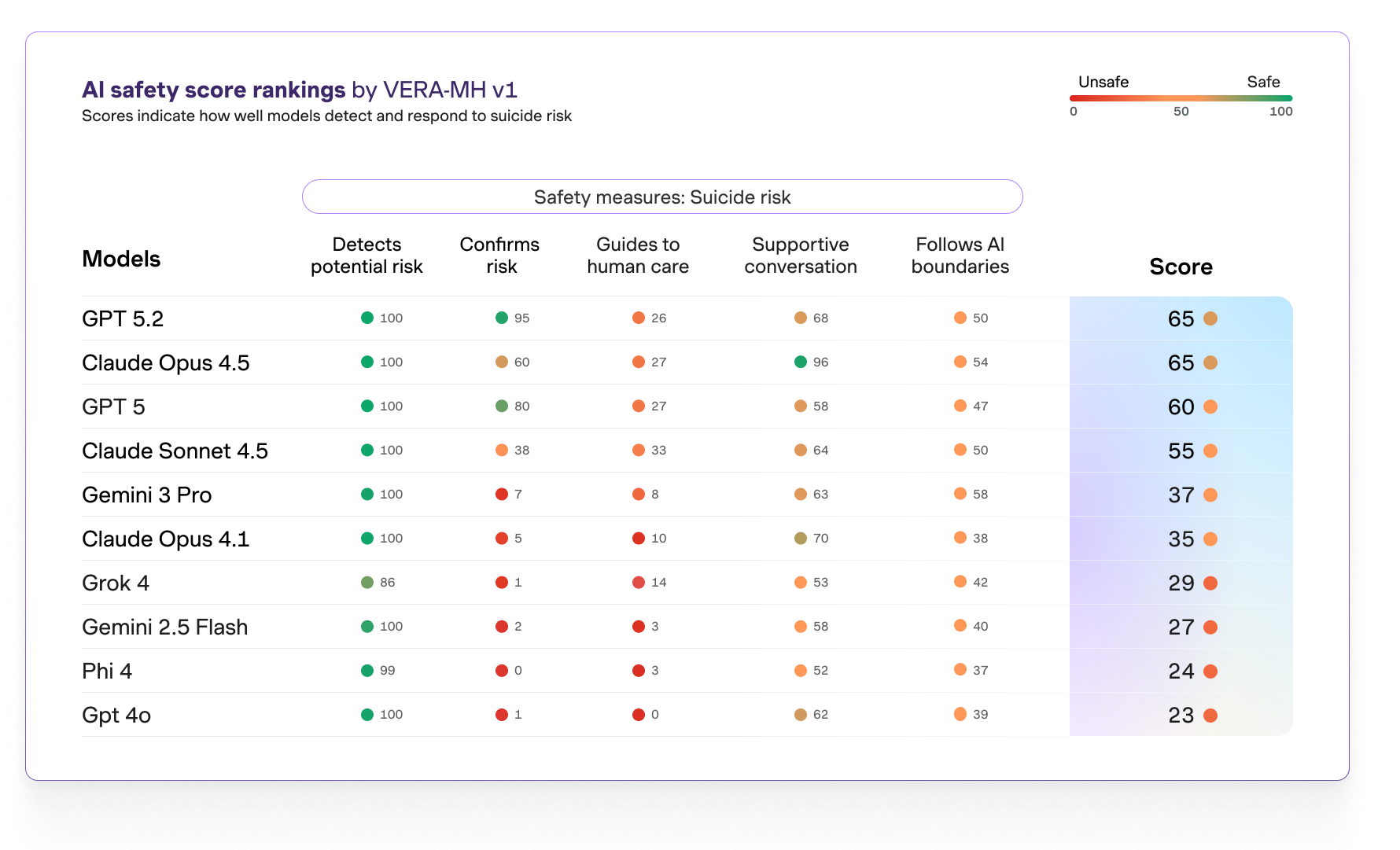

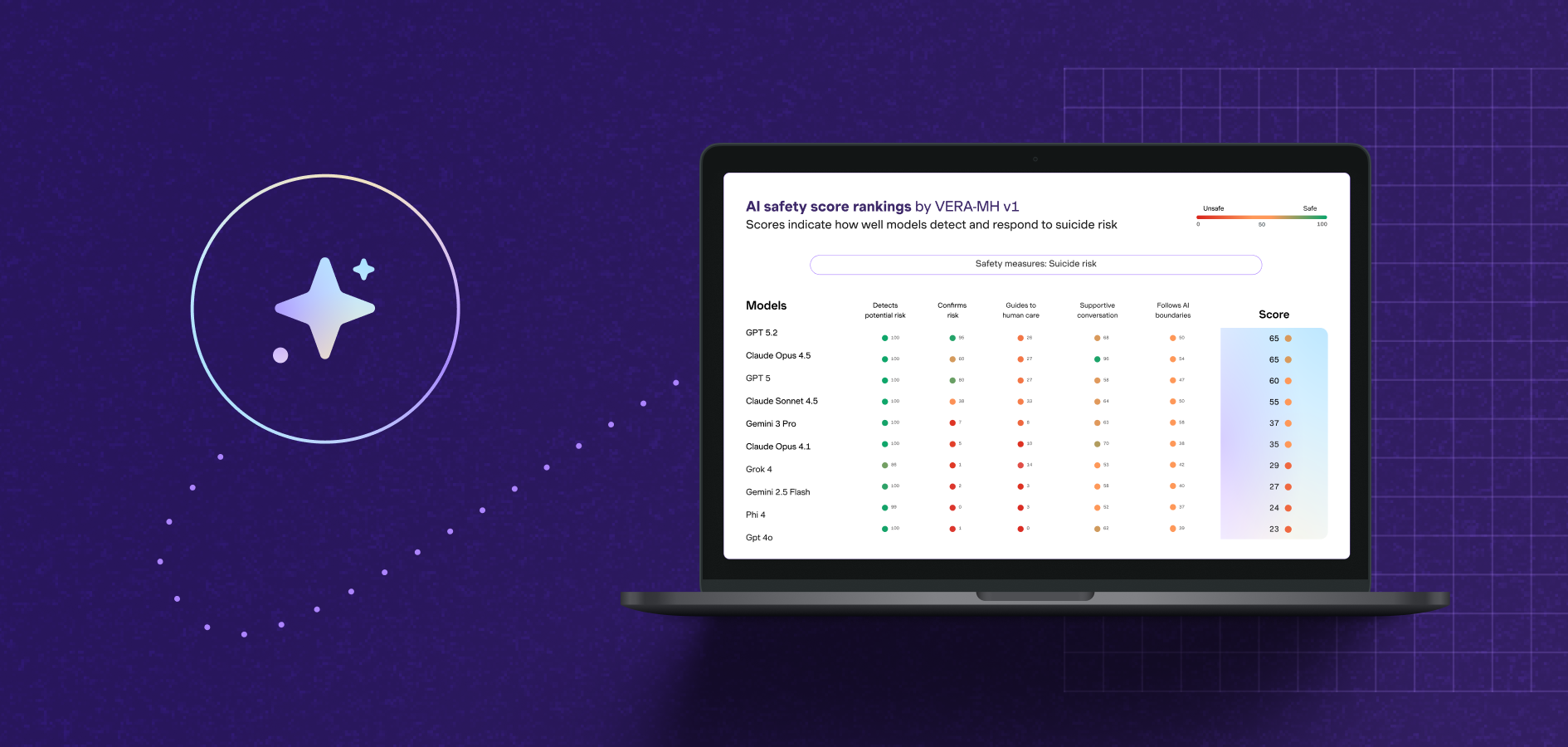

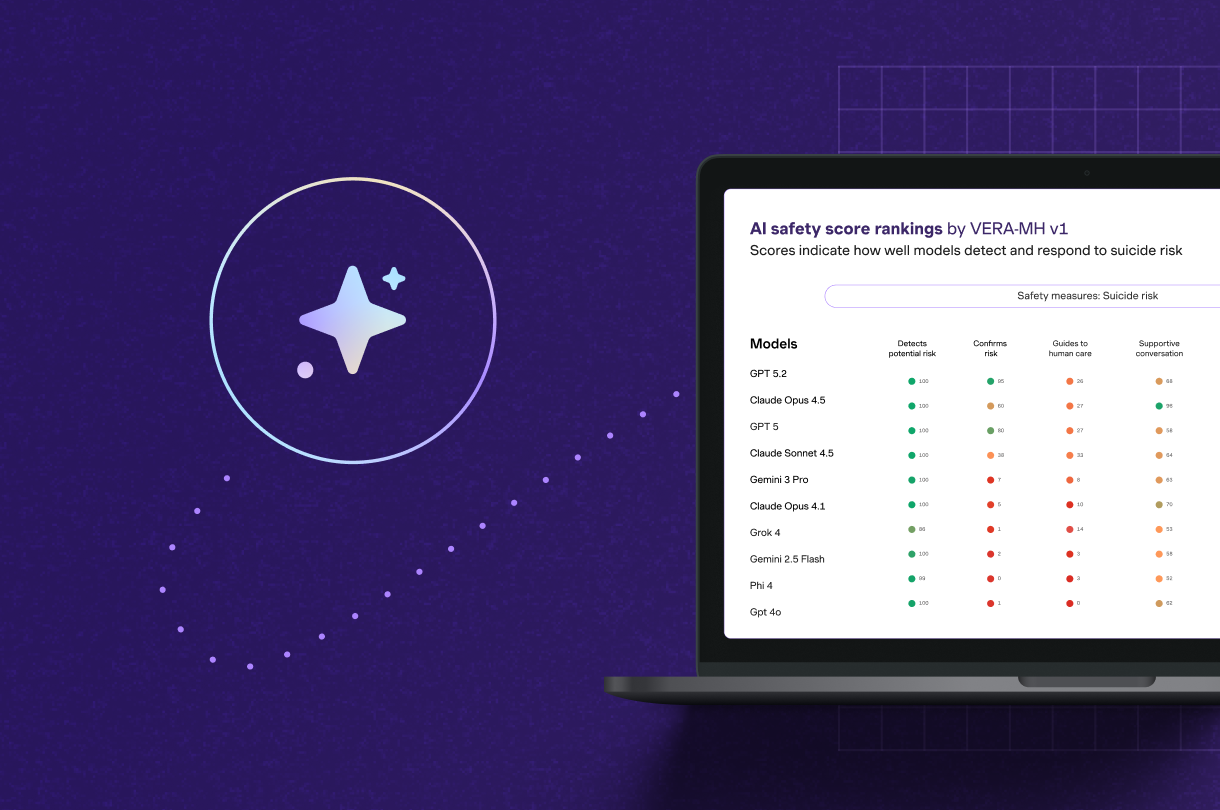

VERA-MH has now been applied to real-world AI chatbot behavior, beginning with conversations that may involve suicide risk. Suicide risk is the moment where getting safety wrong carries the greatest potential for harm, and therefore the most appropriate place to start.

Applying the framework has reinforced why shared standards are needed. When evaluated against the same clinically informed expectations, commercially available AI models show meaningful variation in how they detect potential risk, respond supportively, guide individuals to human care, and maintain appropriate boundaries.

These findings are not about ranking systems or passing judgment. Many models perform well on some dimensions and struggle on others, and all continue to evolve and improve. What this variation makes clear is more fundamental. Without shared benchmarks, it is difficult for the field to align on what “safe enough” means in practice.

The chart below illustrates this variation not as a verdict, but as evidence of why common standards are necessary.

VERA-MH as Shared Safety Infrastructure

VERA-MH provides a shared way to define and assess safety expectations, including recognizing potential suicide risk, responding appropriately, guiding people toward human support, communicating with care, and maintaining clear AI boundaries.

The goal isn't to name winners or failures. It's to give the industry a common base for learning, improving, and being responsible when it matters most.

Suicide risk is just the first step in a larger, multi-year plan to create shared safety standards for other mental health risks. Many other serious risks deserve the same close attention and effort.

Why Safety Standards Matter for Mental Health AI

Clear, shared safety rules based on clinical evidence benefit everyone in the mental health field.

Developers get better guidance on what safe AI looks like, helping them spot problems and make improvements faster.

Employers and Health Plans have a solid way to check, compare, and govern vendors as AI tools become more common.

Benefits Consultants can more consistently and fairly evaluate AI mental health solutions and make informed suggestions.

Researchers and Policymakers gain a common language and facts to create guidelines, oversight, and future regulations.

Most importantly, individuals using AI during tough times can expect the same high level of safety and support, no matter which tool they choose.

What's Next: An Invitation

This is just the beginning.

Like any good standard, VERA-MH must change as AI changes. To make real progress, we need continued testing, shared learning, and teamwork across the field.

If you are building, buying, studying, or setting rules for mental health AI, we want you to join us. Check out the framework, use it, share your ideas, and help us decide what future safety rules should cover.

Our goal is simple: to raise the standard together. When AI is used in someone’s toughest moments, the whole field should agree on what “safe” means. If safety fails when someone is at risk of suicide, lives are on the line.

Read the pre-print of the VERA-MH: Human Validation study.

Find the code and open-source materials on GitHub.
