There’s a good chance your employees are turning to AI for more than just brainstorming sessions. Nearly half (48.7%) of U.S. adults have used large language models (LLMs), through generative chatbots or digital health apps, for psychological support in the last year. But despite how widely these tools are used, there hasn’t been any oversight of the use of AI in mental health. Spring Health is ready to change that.

The accessibility of chatbots could transform mental health at a time when half of the world’s population is expected to experience a mental health disorder sometime in their lives. Instead of scheduling a session with a licensed mental health professional, many will seek support from AI.

But there are real risks with the use of AI in mental healthcare. Most AI chatbots are not designed to help individuals seeking this kind of support. As a result, they lack clinical oversight, regulatory guardrails, and the ability to reliably recognize and respond to signs of crisis.

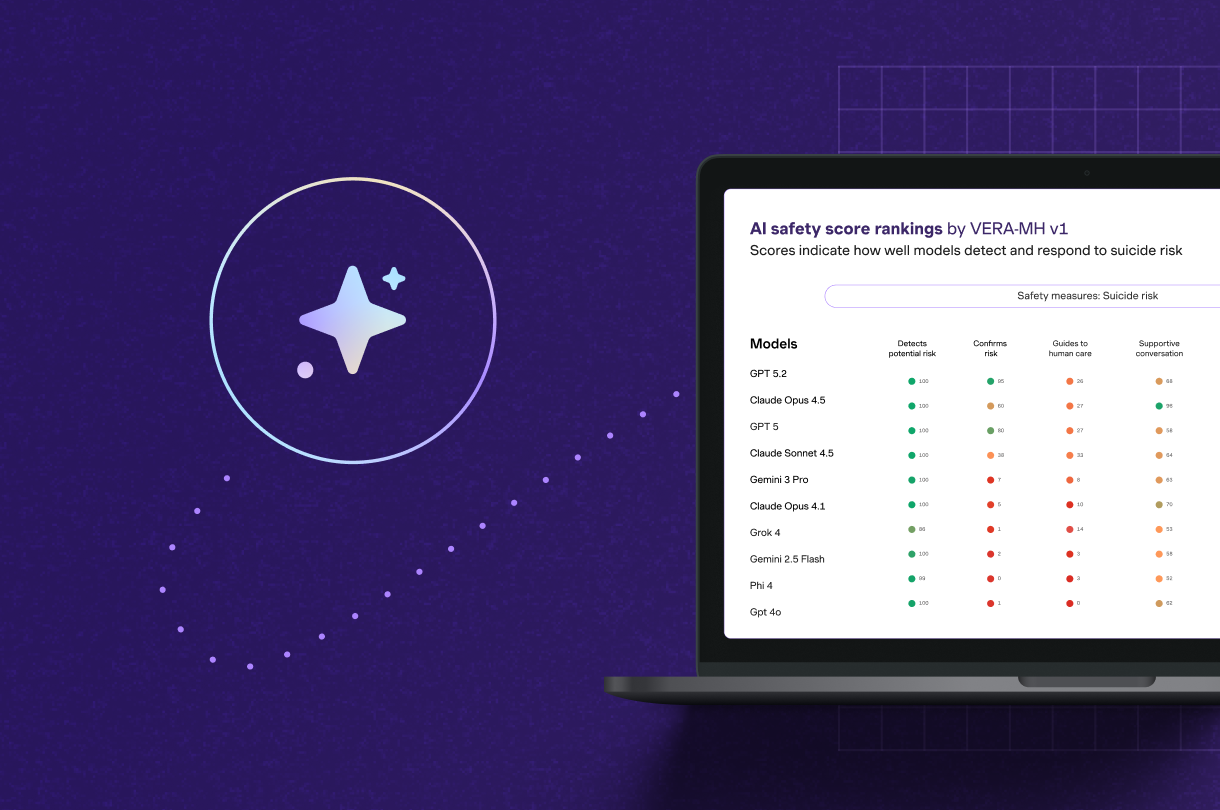

At Spring Health, we believe this moment calls for leadership. That’s why we introduced VERA-MH (Validation of Ethical and Responsible AI in Mental Health) — the first clinically grounded evaluation framework designed to assess chatbots in mental health.

Review the full concept paper.

Do you have feedback on VERA-MH? Learn more and share your thoughts with us.

Why is VERA-MH important to employers?

Navigating how and when to incorporate AI into the tools, services, and benefits you offer to your employees is top of mind for many HR leaders. Such decisions have far-ranging consequences for organizational effectiveness, culture, and business outcomes. And when it comes to mental health support, they may also impact the safety and well-being of your workforce.

AI is already reshaping mental health, with mounting stories of enormous promise, as well as potential harm. Many employees are already turning to AI tools, regardless of whether their organizations officially offer or endorse them.

For employers, this raises important questions such as:

- How does our existing mental health program use AI?

- How do we ensure our employees always have safe, clinically sound, and trustworthy mental health experiences?

VERA-MH offers a foundation for answering those questions. Built through collaboration among practicing clinicians, suicide prevention experts, ethicists, and AI developers, it provides a rigorous standard for evaluating AI safety in one of the most sensitive areas of care: suicide risk.

Mental health solution providers that use VERA-MH to demonstrate the safety and efficacy of their AI signal to current and potential customers that their technology can be trusted. In turn, employers who decide to purchase employee mental health benefits can:

- Protect their workforce by ensuring that AI tools can recognize distress and connect people to appropriate help.

- Reduce organizational risk by holding AI solutions to the same level of scrutiny as other health benefits.

- Build trust by showing employees that innovation and safety go hand in hand.

Employers have worked to expand access to mental health services. VERA-MH represents the next step by ensuring that AI tools in the mental health space are held to the same ethical and clinical standards as other forms of healthcare.

Why is VERA-MH important to employees?

When someone turns to a chatbot for help, what happens next matters.

Maybe it’s 2 a.m., and a manager named Jordan types, “I’m not okay.” A compassionate, clinically grounded response can offer stability, empathy, and a clear path to real help.

VERA-MH helps ensure that AI tools provide compassionate, clinically appropriate responses. It sets out clear criteria for what safe, responsible AI in mental health looks like, evaluating whether a chatbot can:

- Recognize potential risk or suicidal language.

- Respond with empathy and validation.

- Ask appropriate, clarifying questions.

- Know when to escalate to a human clinician.

- Avoid harmful or stigmatizing language.

For employees, this means they have access to safer digital touchpoints and a more trustworthy mental health ecosystem. When someone finally reaches out—even to a chatbot—what comes back should be calm, caring, and clinically sound.

How do we know if using AI to improve mental health is safe? Watch our video below to learn more about VERA-MH.

Why is VERA-MH important to Spring Health?

Spring Health’s roots are in AI. In 2016, April Koh and Adam Chekroud founded Spring Health as a company that uses AI to better match people to the care they need. From care pathways to clinical decision support, Spring Health was built around the belief that data, technology, and human-centered design can work together to deliver safe, empathetic, and effective care.

Recently, we’ve deepened that commitment through two major initiatives:

- We introduced a comprehensive framework for developing and governing AI across all Spring Health products—prioritizing transparency, human oversight, and clinical validation at every step.

- We convened the AI in Mental Health Safety and Ethics Council. This coalition of independent experts across ethics, clinical science, and technology will help to ensure AI in mental health is developed responsibly and benefits the entire ecosystem.

Together, these initiatives reflect our long-standing promise: To lead with evidence, act with empathy, and ensure every innovation in mental health technology improves care and does not cause harm.

What’s next for VERA-MH?

We see VERA-MH as a living framework—one that will evolve to address new risks and opportunities as AI in healthcare matures.

Our next steps include:

- Engaging with HR leaders, clinicians, researchers, and AI developers through a 60-day Request for Comment (RFC) period to gather feedback and shape future framework iterations.

- Publishing updates and open data on our validation results to maintain transparency and trust, starting with a first version early next year that incorporates market feedback.

- Expanding the framework to assess other high-risk areas, including self-harm, harm to others, and support for vulnerable groups.

The core goal will remain the same: ensuring that technology commonly used for mental health provides care that meets rigorous clinical and safety standards.

We would love to hear from you

We believe this initiative requires everyone’s voice to be heard. That’s why we are inviting broad participation across HR leaders, mental health professionals, researchers, and AI developers.

Through a formal Request for Comment (RFC), we’re gathering input to strengthen VERA-MH. By contributing, you can help set the standards that protect your workforce, reduce organizational risk, and ensure future AI adoption in mental health is done safely.

Here’s how you can stay updated and involved:

- Read the concept paper. Learn how the first version of VERA-MH works in more detail.

- Share feedback by December 20, 2025. Let us know what matters most to your organization in developing safe AI standards by giving feedback via the link below.

- Join the conversation. Share this news with your team and your network to let them know that ethical AI in mental health is a cause you care about.

Review the full concept paper.

Do you have feedback on VERA-MH? Learn more and share your thoughts with us.

If you or someone you know is in crisis

- In the U.S., contact the 988 Suicide and Crisis Lifeline: call or text 988, or chat at 988lifeline.org.

- Veterans and service members can call 988 and press 1 (or text 838255) to reach the Veterans Crisis Line.

- Spanish-language support is available by calling 988 and pressing 2, or by texting AYUDA to 988.
