Highlights

- Discover how AI reduces mental health barriers employees face—from stigma and access gaps to wait times and poor care continuity.

- Learn how AI triage, early crisis detection, and self-guided support are transforming employee engagement with mental health benefits.

- Get a sneak peek at our latest AI safety checklist, plus Spring Health’s closed-loop, HIPAA-compliant approach to responsible AI.

The barriers employees still face

Even with modern benefits, employees continue to hit frustrating and sometimes dangerous obstacles in their mental health care journey.

Common barriers to mental health support

| Barrier | Impact on employees |
|---|---|
| Long wait times | Employees wait 4–12+ weeks for an appointment |
| Limited access | 69% of U.S. counties lack a psychiatric mental health provider |
| Stigma or fear | 42% of employees fear job impact if they talk about mental health |
| Poor continuity of care | Repeating their story to every new provider or during every session |
| Inflexible scheduling | Shift-based, hourly, and remote workers struggle to find time to engage |

These issues can cause employees to disengage entirely—or only seek help once they reach a crisis point.

Choosing a safe AI mental health solution

Watch our webinar replay to learn which questions to ask when evaluating your next EAP.

How AI reduces mental health barriers for employees

With the right guardrails, AI can remove these barriers without replacing the human connection at the heart of care. Here's how:

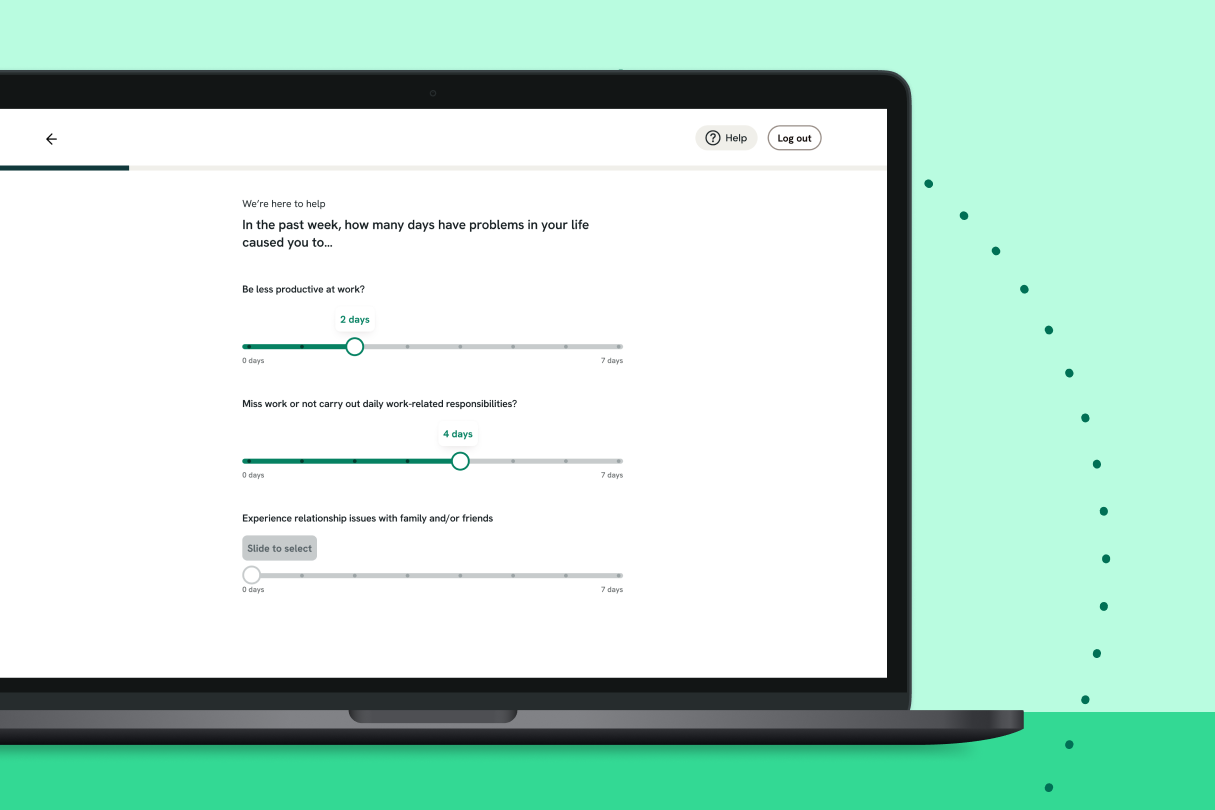

1. Smarter triage for faster access

AI can assess risk levels in real time—then direct employees to the appropriate level of care. This helps employees start with the right support the first time.

| Risk level | AI-recommended path |
|---|---|
| Low | Self-guided content or digital programs |
| Moderate | Therapy with a licensed provider |
| High | Crisis support and immediate clinician review |

This routing helps reduce bottlenecks for providers and gives employees faster, more relevant support.
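
As a rough illustration of the routing table above, the mapping from risk level to care path can be written as a simple lookup. This is a minimal sketch, not Spring Health's implementation: the names `RiskLevel`, `CARE_PATHS`, `route`, and `needs_human_review` are hypothetical, and the sketch assumes the risk level has already been assessed upstream.

```python
from enum import Enum


class RiskLevel(Enum):
    """Hypothetical risk levels, mirroring the three rows of the table."""
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"


# Care paths copied from the routing table; the dict itself is illustrative.
CARE_PATHS = {
    RiskLevel.LOW: "Self-guided content or digital programs",
    RiskLevel.MODERATE: "Therapy with a licensed provider",
    RiskLevel.HIGH: "Crisis support and immediate clinician review",
}


def route(level: RiskLevel) -> str:
    """Return the recommended care path for an already-assessed risk level."""
    return CARE_PATHS[level]


def needs_human_review(level: RiskLevel) -> bool:
    """Assumption: only the high-risk path triggers immediate clinician review."""
    return level is RiskLevel.HIGH


if __name__ == "__main__":
    for level in RiskLevel:
        print(f"{level.value}: {route(level)} (review: {needs_human_review(level)})")
```

In practice the hard part is the assessment that produces the risk level; the lookup only shows how each level maps to a distinct starting point for care.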

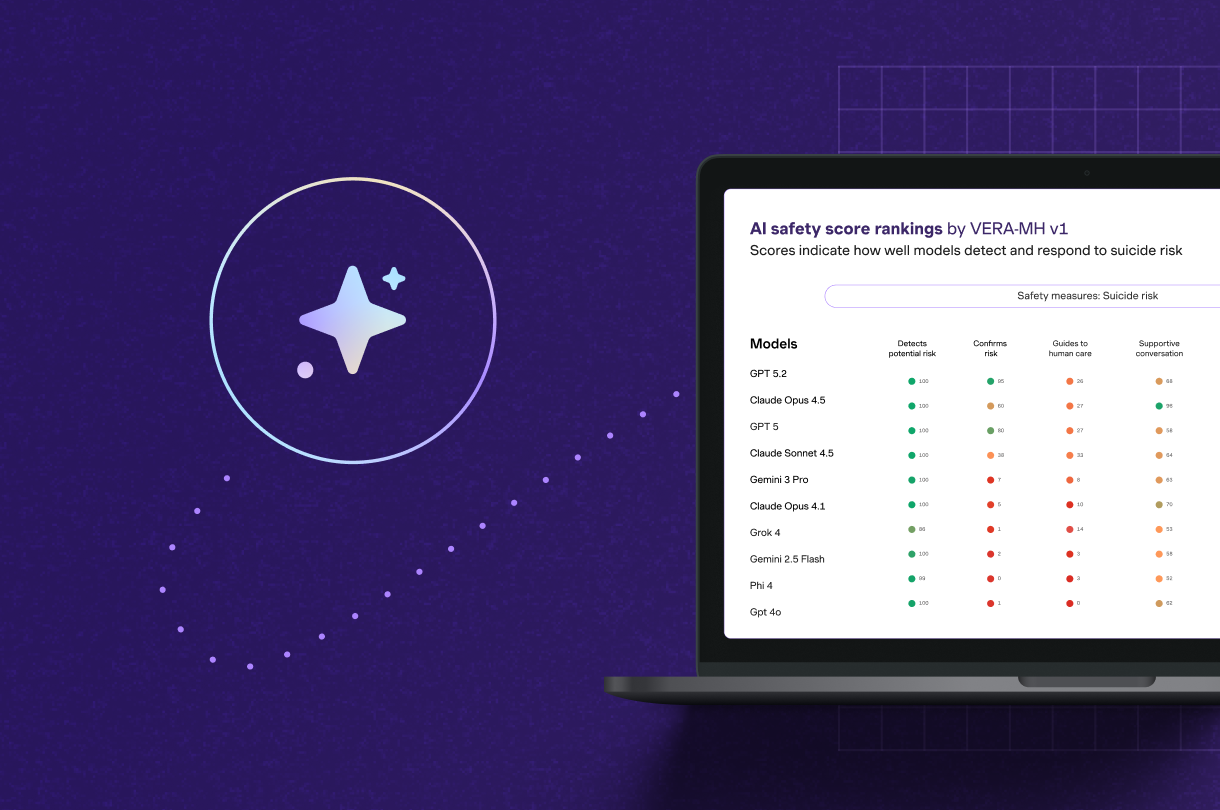

2. Early crisis detection

Many employees may not openly disclose thoughts of self-harm or severe distress.

AI can identify patterns or language that indicate elevated risk, even when the signals are subtle, and escalate to a human reviewer as needed.

At Spring Health, for example, our suicide risk classifier correctly flags 97% of immediate risk cases, with fallback language and outreach protocols in place for the other 3%.
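
A figure like "flags 97% of immediate risk cases" reads as a recall (sensitivity) measure: of the cases that truly are immediate risk, the share the classifier catches. The sketch below shows that arithmetic under that assumption. The counts are hypothetical, chosen only to reproduce a 97% rate, and the article does not state how the figure was measured.

```python
def recall(true_positives: int, false_negatives: int) -> float:
    """Share of actual immediate-risk cases that the classifier flagged."""
    total = true_positives + false_negatives
    if total == 0:
        raise ValueError("no positive cases to evaluate")
    return true_positives / total


if __name__ == "__main__":
    # Hypothetical counts for illustration only, not real evaluation data.
    flagged = 97  # immediate-risk cases the classifier caught
    missed = 3    # immediate-risk cases left to fallback protocols
    rate = recall(flagged, missed)
    print(f"recall = {rate:.0%}; {missed} cases rely on fallback and outreach")
```

Recall says nothing about false alarms, so a buyer comparing vendors may also want to ask how often low-risk cases are flagged.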

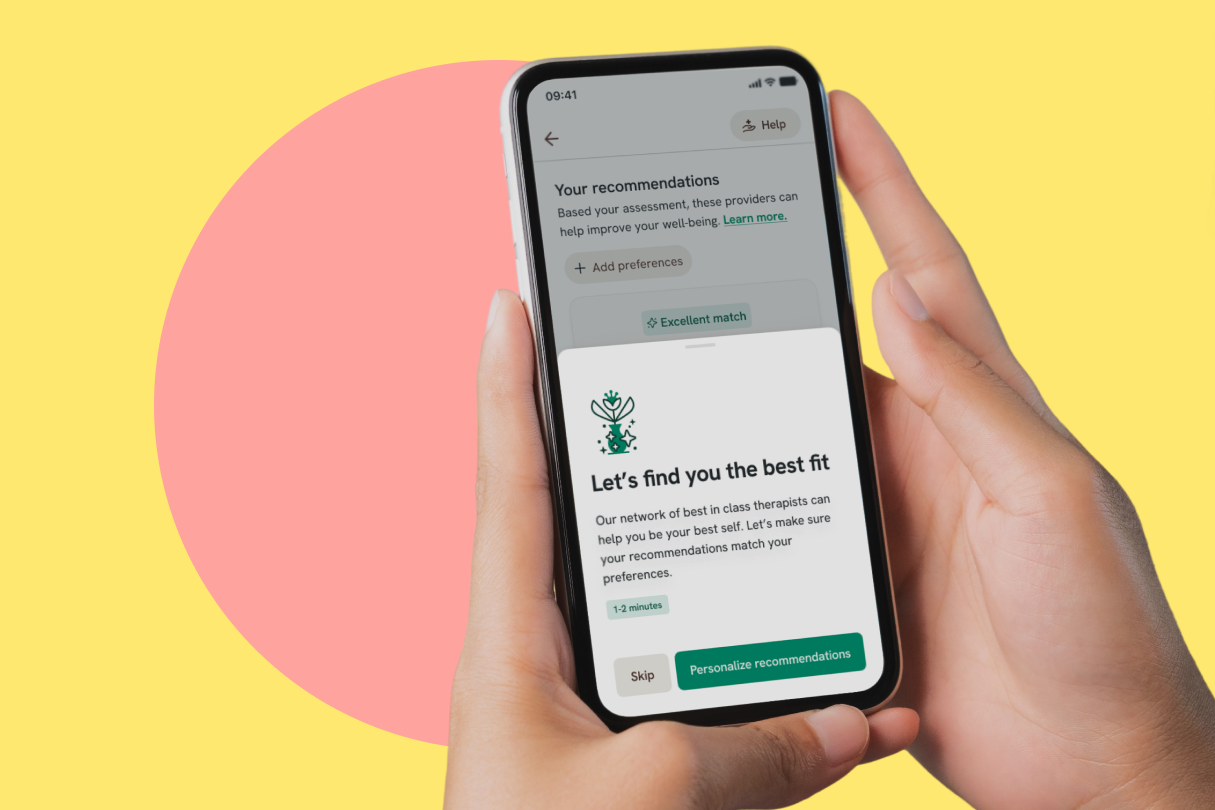

3. Self-guided, always-on support

Not every concern requires immediate therapy.

AI-powered tools like chatbots and guided programs offer employees 24/7 support for managing:

- Stress

- Anxiety

- Sleep issues

- Adjustment challenges

These tools also help reduce stigma, letting employees engage privately and on their own terms.

4. Better documentation and continuity

AI-generated summaries of sessions help therapists track progress and maintain continuity, especially when caseloads are high or provider turnover occurs. This reduces the need for employees to “start over” repeatedly and supports more consistent care.

But not all AI is created equal

While AI presents exciting opportunities, it also introduces real risks—especially when it’s not built for clinical care.

What to ask before you adopt AI in mental health

| Category | Key questions |
|---|---|
| Clinical safety | Is there a multi-layer safety framework? How is risk detection accuracy measured? |
| Privacy & security | Is data de-identified before processing? Is the system closed-loop and encrypted? |
| Compliance | Are HIPAA Business Associate Agreements in place with all vendors? |
| Transparency & trust | Can employees opt out? How is AI use explained clearly and upfront? |
| Human oversight | Who reviews high-risk responses? What’s the escalation path? |

Want a deeper dive? Download the full eBook for a detailed buyer's checklist.

AI is transforming mental health. How can you ensure it's done safely?

Do you know what to look for when evaluating AI within your mental health solution?

A responsible path forward

Spring Health’s approach to AI emphasizes safety, transparency, and privacy. Our system includes:

- A closed-loop environment: Data never goes to public models or open platforms

- De-identification at the source: Sensitive information is automatically removed before processing

- A six-layer safety framework, including input/output monitoring and clinician escalation

- A suicide risk classifier that correctly flags 97% of immediate risk cases, with human outreach when any concern is detected

- Full HIPAA compliance with BAAs and regulatory oversight
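
To make "de-identification at the source" concrete, here is a deliberately simplified sketch that masks two kinds of identifiers before text is passed on for processing. It is an assumption-laden illustration, not Spring Health's method: the patterns and the `redact` function are hypothetical, and real de-identification covers many more identifier types than email addresses and U.S.-style phone numbers.

```python
import re

# Hypothetical patterns for illustration; production systems need far broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text: str) -> str:
    """Replace email addresses and simple phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    message = "Reach me at jane.doe@example.com or 555-123-4567."
    print(redact(message))
```

The point of the sketch is the ordering: identifiers are removed first, and only the redacted text moves on to any model.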

Responsible AI doesn’t just scale care—it earns trust by protecting the people it serves.

Download your eBook: “AI is Transforming Mental Healthcare. Here’s How to Embrace It Safely.”

Get your copy to learn:

- Where AI fits in today’s mental health strategy

- What pitfalls to watch for

- How to evaluate tools before you sign

FAQ

How is employee privacy protected?

Data is de-identified, encrypted, and processed in a private, closed-loop system. We never share individual data with employers or third parties.

How do I know which vendors are truly responsible?

Ask how they do it, not just what they do. Our eBook includes a detailed checklist and guidance on what to look for in a mental health AI partner.
