Artificial intelligence (AI) is rapidly gaining traction in mental health care, and for good reason: it offers the potential to streamline care, uncover clinical insights, and broaden access to essential support.

However, alongside this promising outlook, there's a significant and often overlooked risk for HR and benefits leaders: the growing use of off-label AI in mental health.

What is off-label AI in mental health?

"Off-label AI" refers to the application of general-purpose AI tools, such as Large Language Models (LLMs), for purposes for which they were not originally developed or clinically validated, particularly in the realm of employee mental health support.

Nearly half (48.7%) of U.S. adults have tried LLMs for psychological support in the last year. As I said during our most recent webinar, that means many of these tools are, in essence, being used off-label.

For example:

- An employee uses an LLM to talk through suicidal thoughts.

- A wellness app provides automated affirmations but lacks escalation protocols for risk.

- A vendor adds an “AI-powered coach” without disclosing how it handles bias and safety concerns.

These scenarios may seem harmless on the surface. But when AI is embedded in a deeply human process like mental health care, the consequences of using the wrong tool can be serious.

The risks are real and rising

General-purpose AI tools aren’t designed to handle complex mental health situations. They lack clinical safeguards, oversight, and often basic compliance protections.

Using off-label AI carries several critical risks, including:

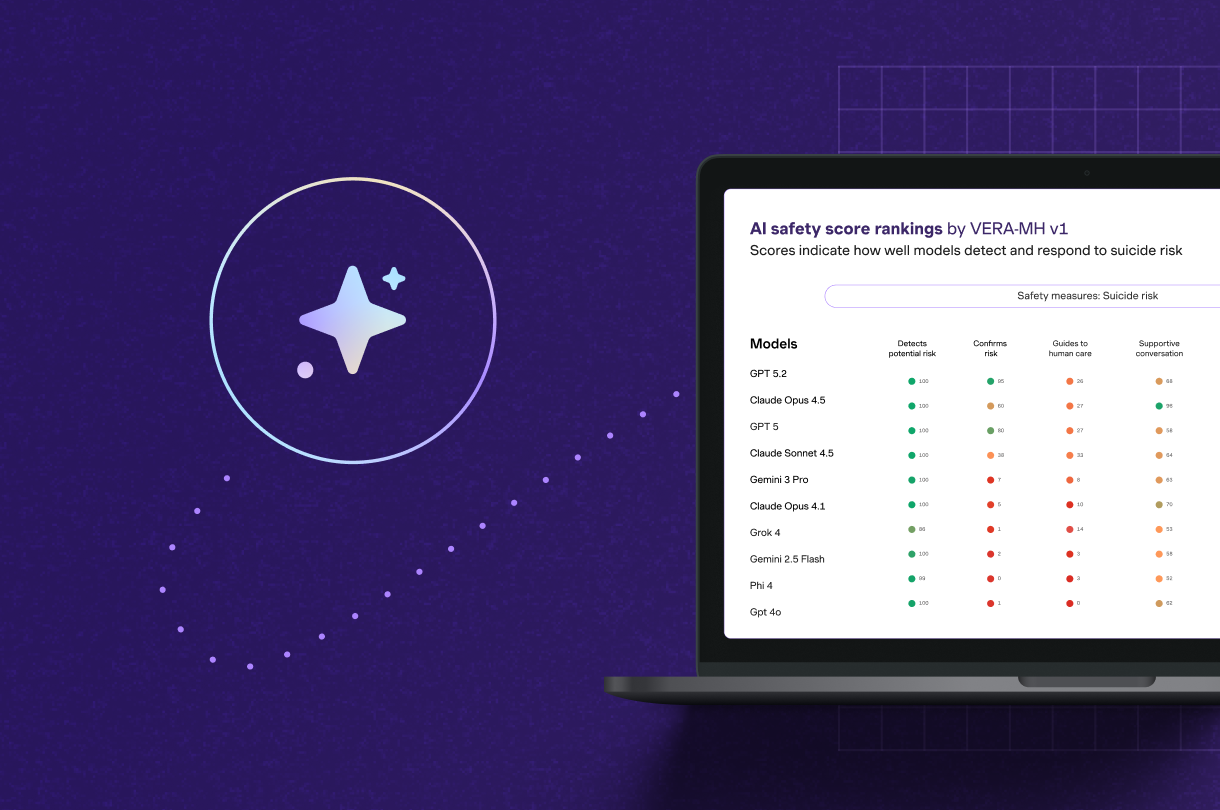

- Clinical misclassification: General-purpose AI tools may miss signs of suicidal ideation, validate disordered thinking, or fail to escalate high-risk situations to a human. Without clear safety protocols, the consequences can be severe.

- Privacy and compliance gaps: Tools not built for health care often lack HIPAA protections and Business Associate Agreements (BAAs), and they may store or process sensitive employee data insecurely, putting both employees and employers at risk.

- Loss of trust and engagement: If AI feels invasive, confusing, or misaligned with employee needs, trust quickly erodes. And when trust breaks down, employees disengage from the very tools meant to support them.

“I think with the advent of AI, there’s so much we can do to improve mental health care, how it’s delivered, how we can personalize care for patients, how we can supercharge providers, and really drive consistent great outcomes for all patients that we try to treat in mental health care,” said Dr. Millard Brown, Spring Health’s Chief Medical Officer, during our webinar. “But we have to do it in really safe and smart ways, and first, do no harm.”

Watch the webinar replay to hear more of Dr. Millard Brown's thoughts on ethical AI use.

Purpose-built AI is the safer path

Forward-thinking organizations are adopting purpose-built AI systems that are clinically governed and designed for safety from the ground up.

These systems:

- Use mental health-specific training data and evidence-based frameworks such as cognitive behavioral therapy (CBT) or acceptance and commitment therapy (ACT).

- Include real-time monitoring for signs of self-harm, risk, or crisis-level language.

- Feature multi-layered safeguards such as 24/7 escalation to human clinicians, input monitoring, and output safety checks.

How to spot the difference

If you’re evaluating AI-powered mental health tools, don’t stop at, “Do you use AI?”

Ask: How do you use it safely?

The checklist below lists a few questions to ask of any mental health solution you're evaluating.

| Checklist area | What to ask of a mental health solution |
|---|---|
| Clinical safety | How do you measure accuracy in detecting crisis risk? Do you have clinician oversight? |
| Privacy & security | Is the data encrypted and de-identified? Is the AI hosted in a closed-loop system? |
| Transparency & trust | Do employees know when AI is in use? Is it always opt-in? |
| Compliance | Are you HIPAA-compliant? Do you have BAAs in place with your AI vendors? |
| Human oversight | What's the escalation protocol when something concerning is detected? |

You can safely embrace AI in mental health.

AI is transforming mental healthcare. Our eBook will help you ensure your solution is using AI safely.

Why this matters now

AI is already transforming mental health care, but it’s up to all of us to shape how. Off-label use of LLMs for psychological support is growing rapidly, even though most of these tools were never designed for clinical use.

The goal of AI in mental health is healing and better outcomes. It's not to drive dependence on the tool, attract eyeballs, or simply maximize engagement.

Responsible adoption isn’t optional. It’s the only way to ensure AI improves outcomes without compromising safety, privacy, or trust.

Employing AI in mental health safely.

Watch our webinar to learn what to look for when evaluating mental health solutions.
